One of the lessons the school of hard knocks taught us was to be ready for anything. _Anything_

One client space had such a messed up HVAC system that the return air system, which is in the suspended ceiling, didn’t work because most of the vent runs up there were not capped properly. We had quite a few catastrophic failures at that site, as the server closet’s temperatures were always high but especially so in the winter. Fortunately, they moved out of that space a few years ago.

Since we began delivering our standalone Hyper-V solutions, Storage Spaces Direct (S2D) Hyper-Converged or SOFS clusters, and Scale-Out File Server and Hyper-V clusters into Data Centers around the world, we have discovered that there is a distinct difference in Data Centre services quality. As always, “buyer beware” and “We get what we pay for”.

So, what does that mean?

It means that, whether on-premises (“premises” not “premise”), hybrid, or all-in the Cloud we need to be prepared.

For this post, let us focus in on being ready for a standalone system or a cluster node failure.

There are four very important keys to being ready:

- Systems warranties

- 4-Hour Response in a cluster setting

- Next Business Day (NBD) for others is okay

- KVM over IP (KVM/IP) is a MUST

- Intel RMM

- Dell iDRAC Enterprise

- iLO Advanced

- Others …

- Bootable USB Flash Drive (blog post)

- 16GB flash drive

- NTFS formatted ACTIVE

- Most current OS files set up and maintained

- Keep those .WIM files up to date (blog post)

- Either Managed UPS or PDU

- Gives us the ability to power cycle the server’s power supply or supplies.

In our cluster settings, we have our physical DC (blog post) set up for management. We can use that as our platform to get to the KVM/IP to begin our repair and/or recover processes.

This is what the back of our (S2D) cluster looks like now:

The top flash drive is what we have been using for the last few years. A Kingston DTR3 series flash drive.

The bottom one is a Corsair VEGA. We have also been trying out the SanDisk Cruzer Fit that is even smaller than that!

The main reason for the change is to remove that fob sticking off the back or front of the server. In addition, the VEGA or Fit have such a small profile we can ship them plugged in to the server(s) and not worry about someone hitting them once the server is in production.

Here is a quick overview of what we do in the event of a problem:

- Log on to our platform management system

- Open the RMM web page

- log on

- Check the baseboard management controller logs (BMC/IPMI logs page)

- If we see the logs indicate a hard-stop them we’re on to initiate warranty replacement

- If it is in the OS somewhere then we can either fix or rebuild

- Rebuild

- Cluster: Clean up domain AD, DNS, DHCP, and Evict OLD Node from Cluster

- Reset or Reboot

- Function Key to Boot Menu

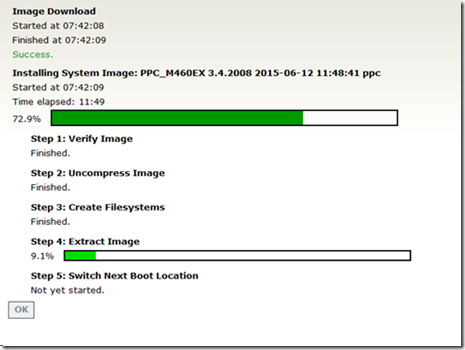

- Boot flash

- Install OS to OS partition/SSD

- Install and configure drivers

- Cluster: Join Domain

- Server name would be the same as downed node

- Update Kerberos Constrained Delegation

- Install and configure the Hyper-V and/or File Services Roles

- Set up networking

- Standalone: Import VMs

- Cluster: Join and Live Migrate on then off to test

Done.

The key reason for the RMM and flash drive? We just accomplished the above without having to leave the shop.

And, across the entire life of the solution if there is a hiccup to deal with we’re dealing with it immediately instead of having to travel to the client site, data centre, or third party site to begin the process.

One more point: This setup allows us to deploy solutions all across the world so long as we have an Internet connection at the server’s site.

There is absolutely no reason to deploy servers without a RMM or desktops/laptops/workstations without vPro. None. Nada. Zippo.

When it comes to getting in to the network, we can use the edge to VPN in or have an IP/MAC filtered firewall rule with RDP inbound to our management platform. One should _never_ open the firewall to a listening RDP port no matter what port it would be listening on.

Philip Elder

Microsoft High Availability MVP

MPECS Inc.

Co-Author: SBS 2008 Blueprint Book

Our Cloud Service

Twitter: @MPECSInc