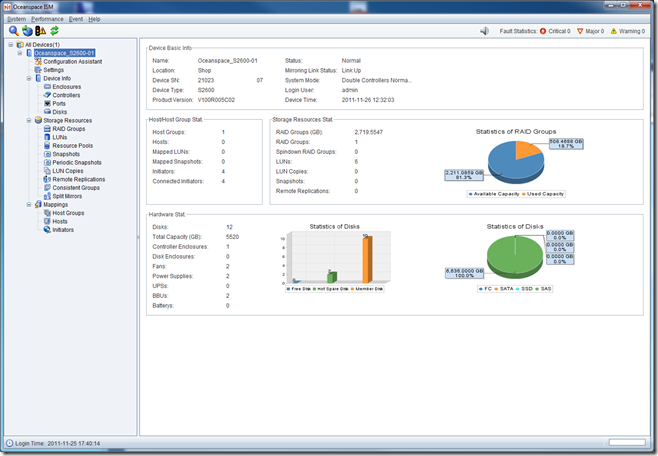

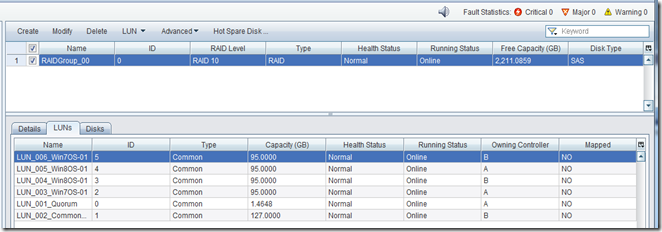

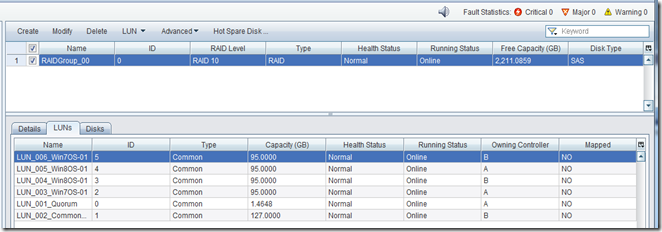

We now have our RAID Group set up as RAID 10.

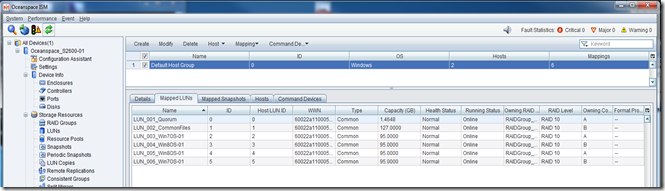

We then went ahead and configured our LUNs:

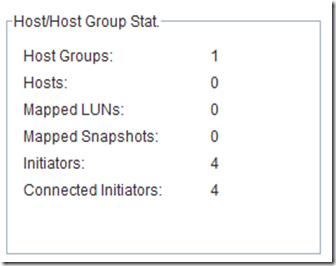

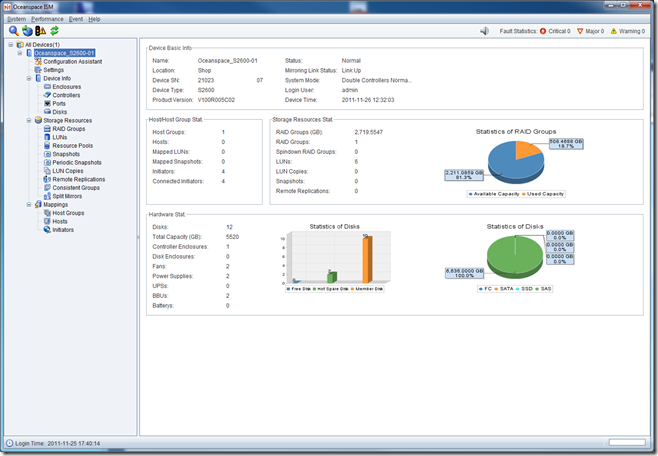

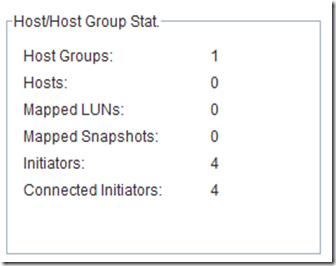

Our two Intel Server System SR1695GPRX2AC units are plugged in and powered on, as can be seen under the Host section (4 Initiators).

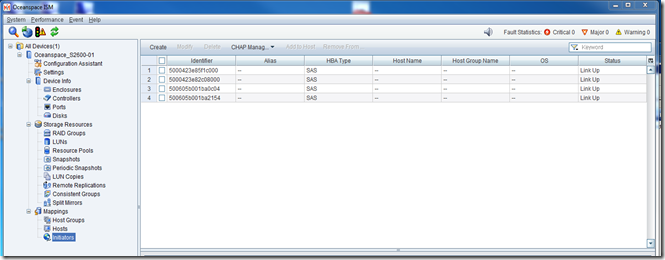

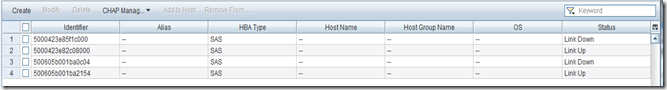

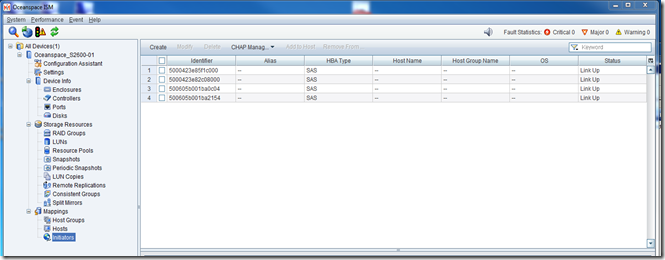

At the bottom of the left-hand column under Mappings in the ISM, we find our four SAS initiators for the two nodes:

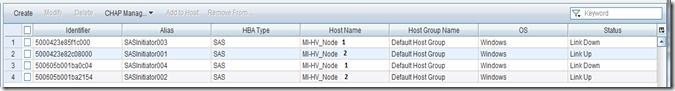

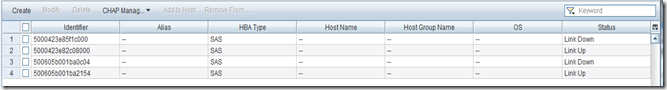

It took a while to figure out how to map the SAS IDs to the LUNs. First, we shut down the second node so that we would know which IDs belonged to which server:

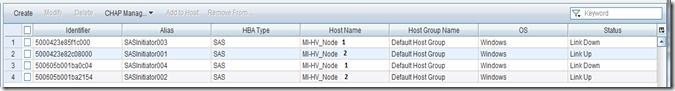

We mapped them to the appropriate node:

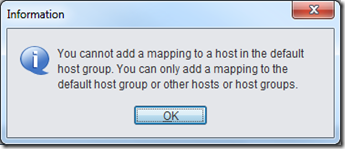

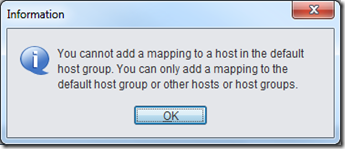
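Shutting down one node is a process of elimination: whatever initiators are still listed while node 2 is powered off must belong to node 1, and the ones that disappeared belong to node 2. A minimal Python sketch of that logic, assuming the initiator IDs are copied out of the ISM by hand; the SAS addresses below are hypothetical placeholders, not our real IDs:

```python
def split_initiators(all_seen: set[str], seen_with_node2_off: set[str]) -> tuple[set[str], set[str]]:
    """Assign SAS initiators to nodes by elimination.

    all_seen: initiator IDs listed in the ISM with both nodes running.
    seen_with_node2_off: IDs still listed after node 2 is shut down.
    Returns (node1_ids, node2_ids).
    """
    unknown = seen_with_node2_off - all_seen
    if unknown:
        # An ID that only appears with node 2 off means the two lists were not captured consistently.
        raise ValueError(f"IDs not in the full list: {sorted(unknown)}")
    node1 = set(seen_with_node2_off)  # still logged in, so it must be node 1
    node2 = all_seen - node1          # vanished when node 2 went down
    return node1, node2


# Hypothetical SAS addresses: two ports per node, four initiators in total.
ALL_SEEN = {"5000000000000a01", "5000000000000a02", "5000000000000b01", "5000000000000b02"}
NODE2_OFF = {"5000000000000a01", "5000000000000a02"}

node1, node2 = split_initiators(ALL_SEEN, NODE2_OFF)
print("Node-01:", sorted(node1))
print("Node-02:", sorted(node2))
```

The sanity check matters because the ISM lists are read at two different times; if they disagree, the split would silently be wrong.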

Then we went to map the actual LUNs to the Hosts:

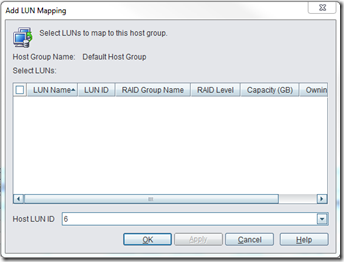

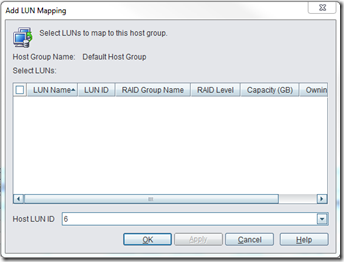

So we moved to the Host Groups node in the left-hand column of the ISM and ran the Add LUN Mapping wizard. Despite appearances, when we selected all of the LUNs, each was given a sequential LUN ID.

That Host LUN ID drop-down list near the bottom is a bit misleading.

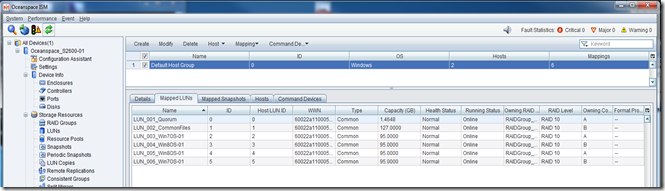

We then had our LUNs mapped to the Default Host Group:

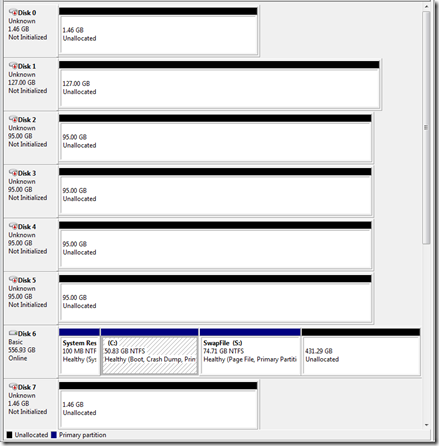

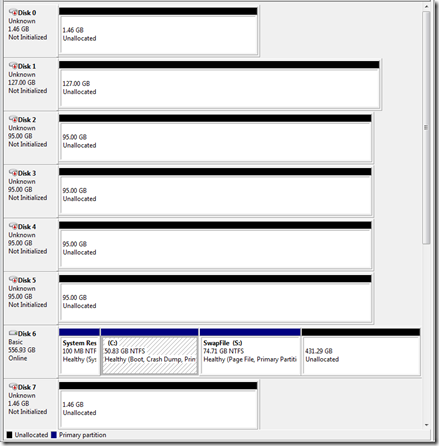

With that, the shared storage showed up in Disk Management on both nodes!

Now on to setting up MPIO on the Hyper-V nodes, running the Cluster Validation Wizard, and finally standing up a Hyper-V Failover Cluster for further testing.

Some Initial Observations

Disk and LUN Configuration

Our primary DAS products have been the Promise VTrak E610sD and E310sD RAID subsystems. So working with the Oceanspace S2600 has been a bit of a challenge, since its management console and setup steps are quite different.

For one, the Oceanspace S2600 does not allow the RAID level to be set per LUN. The RAID level belongs to the RAID Group, however many disks it contains, and every LUN created in that group inherits it.

This contrasts with the Promise VTrak, where we create a Disk Array and then choose a RAID level for each LUN we set up within that Disk Array.

The cost is some storage space, since on the VTrak we would specify RAID 1 for certain LUNs with low I/O needs, such as the host OS volumes for RDS or SQL servers.

However, that is balanced by the fact that with RAID 10 underneath everything, every LUN on the Oceanspace S2600 receives the benefit of higher throughput.
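To put numbers on the space side of this trade-off, usable capacity for a RAID set built from identical disks follows simple formulas. A sketch in Python; the 12 x 600 GB configuration is hypothetical (this post doesn't state the S2600's disk count or size), and RAID 5/6 are included only for comparison:

```python
def usable_capacity_gb(level: str, disks: int, disk_gb: int) -> int:
    """Usable capacity of a RAID set built from identical disks."""
    if level == "RAID1":
        if disks != 2:
            raise ValueError("RAID 1 here means a two-disk mirror")
        return disk_gb  # one disk holds the mirror copy
    if level == "RAID10":
        if disks < 4 or disks % 2:
            raise ValueError("RAID 10 needs an even number of disks, at least 4")
        return (disks // 2) * disk_gb  # half of the disks hold mirror copies
    if level == "RAID5":
        if disks < 3:
            raise ValueError("RAID 5 needs at least 3 disks")
        return (disks - 1) * disk_gb  # one disk's worth of parity
    if level == "RAID6":
        if disks < 4:
            raise ValueError("RAID 6 needs at least 4 disks")
        return (disks - 2) * disk_gb  # two disks' worth of parity
    raise ValueError(f"unsupported level: {level}")


# Hypothetical example: 12 x 600 GB disks.
for level in ("RAID10", "RAID5", "RAID6"):
    print(level, usable_capacity_gb(level, 12, 600), "GB")
```

A two-disk RAID 1 mirror and RAID 10 both yield 50% of raw capacity; the larger space differences appear when comparing against the parity levels.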

Management Console

The Promise VTrak’s web-based management console is considerably more complex and feature-rich than the Oceanspace S2600’s console.

The plus side of the Oceanspace S2600 console is that it was quite simple to learn how to set up storage for our needs, with very little reading required (primarily to learn the nomenclature).

In the end, preference for one console over another is subjective. In both cases, the respective vendor’s management console gets the job done, and that is what matters most to us.

MPIO Setup

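Getting MPIO to claim the storage took a couple of reboots. We removed any existing MPIO device references and then ran:

- `mpclaim -n -i -a` (then rebooted; `-n` suppresses mpclaim's automatic reboot, `-i` adds MPIO support, and `-a` applies it to all eligible devices)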

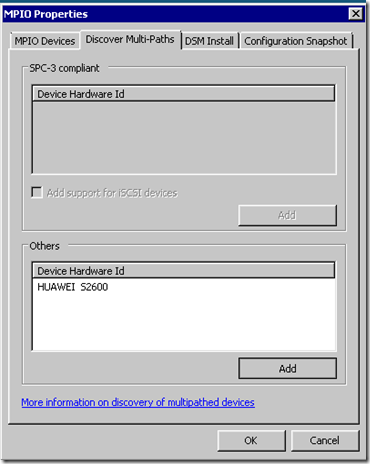

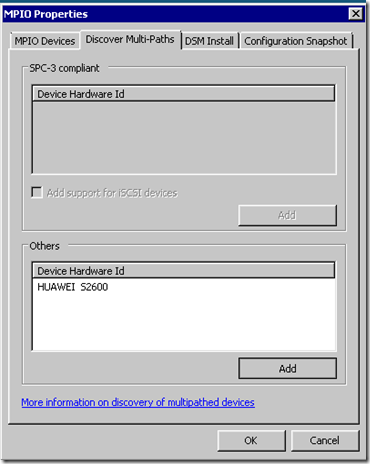

Finally, in MPIOCPL.exe we saw the following on Node-01:

We then saw:

After a minute or two we finally had:

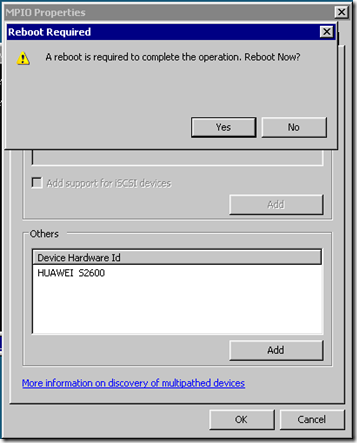

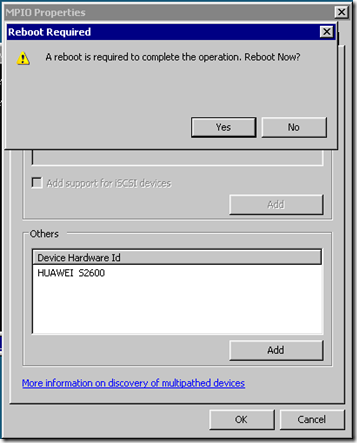

One reboot later:

But our second node was still blank:

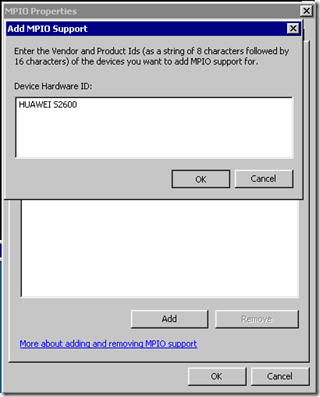

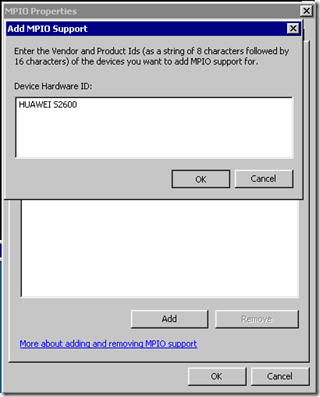

We manually added the Device Hardware ID to match the other node:

After a reboot and another `mpclaim -n -i -a`, we finally had doubles of everything! One reboot later, our shared storage was configured and ready for the Failover Cluster Manager’s Cluster Validation Wizard!
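The Device Hardware ID that MPIO matches on is the device's SCSI vendor ID padded to 8 characters followed by its product ID padded to 16 characters, so the padding has to be exactly right when typing it in by hand. A small Python helper that builds the 24-character string; the vendor and product values below are hypothetical placeholders, so copy the real ones from the Discover Multi-Paths tab in MPIOCPL.exe:

```python
VENDOR_LEN = 8    # SCSI INQUIRY vendor identification field width
PRODUCT_LEN = 16  # SCSI INQUIRY product identification field width


def mpio_hardware_id(vendor: str, product: str) -> str:
    """Build an MPIO Device Hardware ID: vendor padded to 8 chars + product padded to 16."""
    if len(vendor) > VENDOR_LEN or len(product) > PRODUCT_LEN:
        raise ValueError("vendor ID is at most 8 chars, product ID at most 16 chars")
    # ljust pads with trailing spaces, matching the fixed-width INQUIRY fields.
    return vendor.ljust(VENDOR_LEN) + product.ljust(PRODUCT_LEN)


# Hypothetical vendor/product strings; read the real ones from MPIOCPL.exe.
hwid = mpio_hardware_id("VENDOR", "PRODUCT")
print(repr(hwid), len(hwid))
```

Printing with `repr` makes the trailing spaces visible, which is handy when comparing against what the working node shows.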

Long story short, we had a lot of trouble getting one of our nodes (the two are identical all the way through) to recognize both SAS paths to the Oceanspace S2600.
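Without MPIO claiming the storage, each SAS path to a LUN can surface as its own disk; MPIO's job is to collapse the paths that lead to the same LUN into a single disk. A simplified Python model of that grouping, which also flags LUNs reached over fewer paths than expected (the symptom on our problem node). The port names and LUN serial strings are hypothetical, and real MPIO identifies devices from the storage's SCSI identity data rather than a simple string:

```python
from collections import defaultdict


def group_paths(paths):
    """Collapse (initiator_port, lun_serial) pairs into {lun_serial: [ports]}."""
    grouped = defaultdict(list)
    for port, serial in paths:
        grouped[serial].append(port)
    return dict(grouped)


def short_on_paths(disks, expected=2):
    """Return LUN serials seen over fewer than `expected` paths, sorted."""
    return sorted(serial for serial, ports in disks.items() if len(ports) < expected)


# Hypothetical view from one node with two SAS ports; LUN-B is only reachable via port 0.
seen = [
    ("SAS-Port0", "LUN-A"),
    ("SAS-Port1", "LUN-A"),
    ("SAS-Port0", "LUN-B"),
]
disks = group_paths(seen)
print(disks)                  # one entry per LUN, listing the paths behind it
print(short_on_paths(disks))  # LUNs missing a path
```

With two SAS ports per node, a healthy node should show every LUN with two paths; anything returned by `short_on_paths` points at a port or cable that isn't being seen.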

Philip Elder

MPECS Inc.

Microsoft Small Business Specialists

Co-Author: SBS 2008 Blueprint Book

*Our original iMac was stolen (previous blog post). We now have a new MacBook Pro courtesy of Vlad Mazek, owner of OWN.