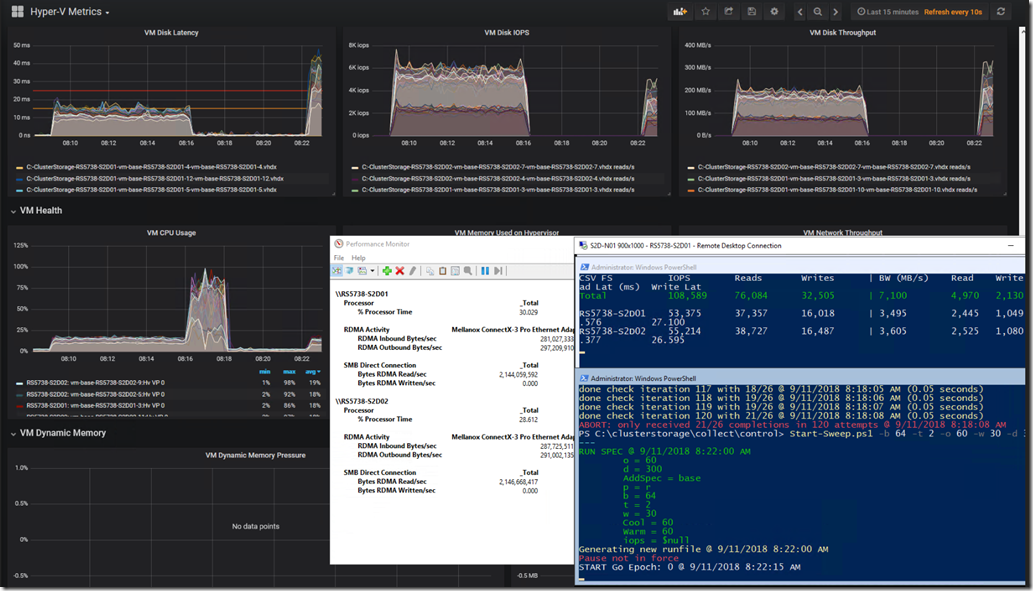

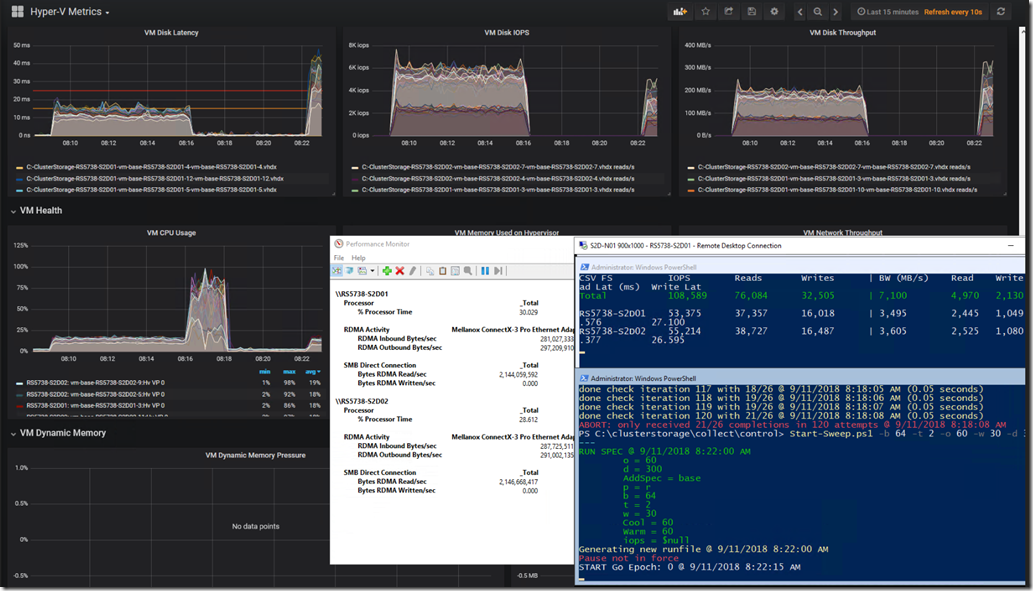

We've been doing _a lot_ of work setting up a Grafana/InfluxDB/Telegraf monitoring and history system lately.

Below is our custom Kepler-64 Storage Spaces Direct (S2D) 2-node cluster being tracked in a Grafana dashboard we've customized:

Grafana graphs, PerfMon RoCE RDMA Monitoring, and VMFleet Watch-AllCluster.PS1 (our mod)

Unfortunately, a substantial number of links to various sites about running the above setup on both Windows and Ubuntu were lost after something seemingly went wrong. :(

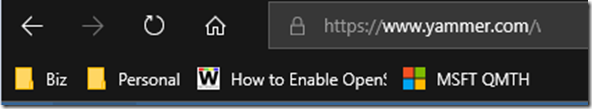

Edge (Sync?) Hiccups then Pukes

As we were quite busy that day and Edge was being very uncooperative, we switched to Firefox for most of our browsing.

Edge: Favourites Bar Shortcut Count Drastically Trimmed

Our first response once things started misbehaving should have been to use the Edge Favourites backup (export) feature and get them out!

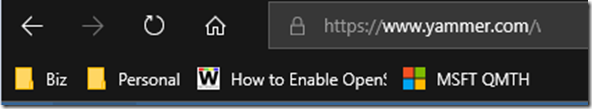

When we opened Edge later in the day this is what we were greeted with:

Edge: Favourites Bar Shortcuts Gone!

As a small caveat, Edge has been misbehaving in one of two ways: either going unresponsive and requiring a kill from Task Manager, or, when another Edge window was opened, refusing to allow Paste or Right Click in the Address Bar and not showing the Favourites, History, or other buttons.

So, kill it from Task Manager? Nope. The Favourites were still not there.

Log off and back on again? Nope.

Reboot the machine? Nope.

Both the Favourites Bar content and _all_ of our Favourites were gone.

Back Them Up!

That day, when things started to misbehave, the next step _should have been_ to grab one of the tablets the Favourites were syncing to, keep it off WiFi so it could not sync, and back it up! Ugh, hindsight is 20/20. :P

It is much like the advice we always start with when training users on Office products: the very first step is to _save_ their work before doing anything else! And, once the work is done, to _save_ it again. That is advice we will be taking ourselves from now on with regard to Edge and Favourites.

After a big day of building Favourites, take a backup.

So, Where Are Those Favourites?

Why, oh why do software vendors move my cheese?

In this case, back in the day with IE, our Favourites lived in OneDrive. If there was a sync hiccup between our various clients, OneDrive Sync would append the machine name to the conflicting shortcuts and we'd be left doing a quick Search & Destroy post mortem. No biggie. Not so with Edge.

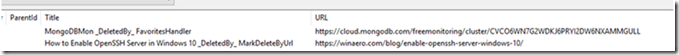

Thanks to Michael B. Smith, via a list, we were pointed to:

Edge stores those Favourites here:

- Stored under `%LOCALAPPDATA%\Packages\Microsoft.MicrosoftEdge_8wekyb3d8bbwe\AC\MicrosoftEdge\User\Default\DataStore\Data\nouser1\120712-0049\DBStore`

- Database Name: `Spartan.edb`
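Since everything lives in that one `Spartan.edb` database, an extra safety net is to copy the whole `DBStore` folder somewhere safe after a big day. The following is a minimal Python sketch of that idea, not a Microsoft-supported procedure. It assumes the path above (the `nouser1\120712-0049` part may well differ per machine or profile) and assumes Edge is fully closed so the database files are not in use. `backup_dbstore` and the OneDrive destination folder are hypothetical names, and we have not tested restoring Edge from such a copy.

```python
import os
import shutil
from datetime import datetime
from pathlib import Path
from typing import Optional

# Folder named in the post. The "nouser1\120712-0049" segment is assumed to be
# machine/profile specific, so check it on each PC before relying on it.
EDGE_DBSTORE = (
    r"%LOCALAPPDATA%\Packages\Microsoft.MicrosoftEdge_8wekyb3d8bbwe\AC"
    r"\MicrosoftEdge\User\Default\DataStore\Data\nouser1\120712-0049\DBStore"
)


def backup_dbstore(src: str, dest_root: str, when: Optional[datetime] = None) -> Path:
    """Copy everything in the DBStore folder (including Spartan.edb) into a new
    timestamped folder under dest_root and return the new folder's path.

    Assumption: Edge is fully closed first, so the database files are not in use.
    """
    # %VAR% expansion only happens on Windows; elsewhere the path is used as-is.
    src_path = Path(os.path.expandvars(src))
    if not (src_path / "Spartan.edb").is_file():
        raise FileNotFoundError(f"Spartan.edb not found under {src_path}")
    stamp = (when or datetime.now()).strftime("%Y%m%d-%H%M%S")
    dest = Path(dest_root) / f"DBStore-{stamp}"
    # copytree creates dest (and any missing parents) and raises if dest already
    # exists, so an earlier backup is never overwritten.
    shutil.copytree(src_path, dest)
    return dest


# Example (hypothetical destination inside OneDrive):
# backup_dbstore(EDGE_DBSTORE, os.path.expandvars(r"%USERPROFILE%\OneDrive\EdgeBackups"))
```

Timestamped folders mean each run adds a new copy rather than replacing the last good one, which matters if the database is already damaged when the backup runs.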

In that utility, change the somewhat hidden dropdown to Favourites and:

Some Edge Favourites Post Backup Import

What Does All This Mean? Edge Bug

It means there is a serious bug somewhere in Edge, with data loss as one possible result.

It means that, for now, one needs to run the Edge export process to back up those Favourites after a serious day of adding to the list!

- In Edge click the Favourites/History/Downloads button

- Click the Favourites Star if they are not shown as above

- Click the Gear

- Click the Import from another browser button

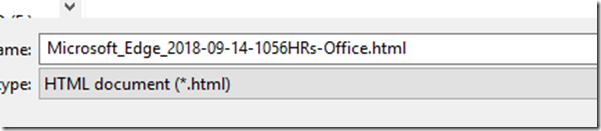

- Click the Export to file button

- Choose a location and give the file a name

- We drop ours in OneDrive to keep it backed up

- Naming convention: DATE-TIME-Location.HTML
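The DATE-TIME-Location.HTML convention is easy to type by hand, but a tiny helper keeps it consistent across machines. This is a minimal Python sketch; the exact formats (`YYYYMMDD` for DATE, 24-hour `HHMM` for TIME) and the `export_filename` name are our assumptions, and Edge itself does not generate these names. Putting the date and time first means an alphabetical sort of the backup folder is also a chronological one.

```python
import re
from datetime import datetime

# Characters Windows does not allow in file names.
_INVALID = re.compile(r'[<>:"/\\|?*]')


def export_filename(location: str, when: datetime) -> str:
    """Build a DATE-TIME-Location.HTML name for an Edge Favourites export.

    Assumed formats: DATE = YYYYMMDD, TIME = HHMM (24-hour). Characters that
    Windows rejects are dropped from the location and spaces become underscores.
    """
    safe_location = _INVALID.sub("", location).strip().replace(" ", "_")
    return f"{when:%Y%m%d}-{when:%H%M}-{safe_location}.HTML"


# export_filename("Office", datetime(2017, 3, 1, 14, 5)) -> "20170301-1405-Office.HTML"
```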

This process will at least help mitigate any future choke in the Edge Favourites setup.

Warning Note

IMPORTANT NOTE: Edge does not have any kind of parsing structure for the import process.

We cannot pick and choose what to import and, if there are still Favourites _in the database_, they may disappear or be deleted during the import!

If the bulk of the Favourites are still there, an alternative to a wholesale import is to open the backup .HTML page, click on the needed links, and Favourite them again. *sigh*
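Clicking through the backup page works, but a short script can list every link in it so the missing ones are quicker to spot. This Python sketch assumes the export uses the common Netscape-style bookmark HTML, where each Favourite is an `<A HREF="...">Title</A>` entry; we have not verified every detail of Edge's output. `list_favourites` is a hypothetical name, and the script only reads the exported file using the standard `html.parser` module, so it never touches Edge or its database.

```python
from __future__ import annotations

from html.parser import HTMLParser


class _FavouriteLinks(HTMLParser):
    """Collect (title, url) pairs from <a href=...> tags in an exported page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[tuple[str, str]] = []
        self._href: str | None = None
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lower-cases tag and attribute names, so <A HREF=...> matches.
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only keep text that sits inside an open link (the Favourite's title).
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None


def list_favourites(html_text: str) -> list[tuple[str, str]]:
    """Return (title, url) for every link in an exported Favourites page."""
    parser = _FavouriteLinks()
    parser.feed(html_text)
    parser.close()
    return parser.links
```

Reading the export with `open(path, encoding="utf-8").read()` and printing or keyword-filtering the result gives a pick-and-choose list without running Edge's all-or-nothing import. Folder names (the `<H3>` entries) are ignored in this sketch.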

Conclusion

What does all of this mean?

Since we've lost data, there's a very serious problem here. In our case, that was a very long and full day's worth of bookmarks/favourites, gone. :(

For now, it means backing those Favourites up _a lot_ when doing critical work that requires knowledge keeping!

Oh, and we need to set aside some time to delve into the NirSoft utility linked to above to see if there are features in there to help mitigate this situation.

Philip Elder

Microsoft High Availability MVP

MPECS Inc.

Co-Author: SBS 2008 Blueprint Book

www.s2d.rocks !

Our Web Site

Our Cloud Service