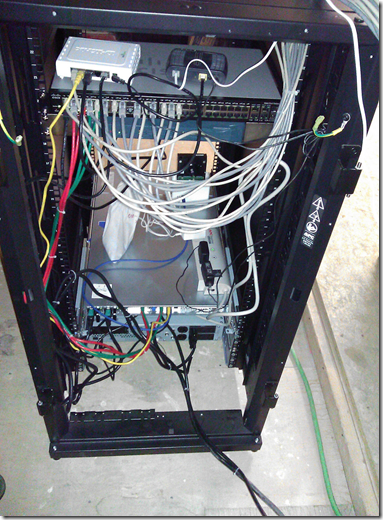

We are in the process of connecting a number of Intel Server System SR1695GPRX2AC units, along with our soon-to-be two Intel Server System R2208GZ4GC units, to one Promise VTrak E610sD RAID subsystem.

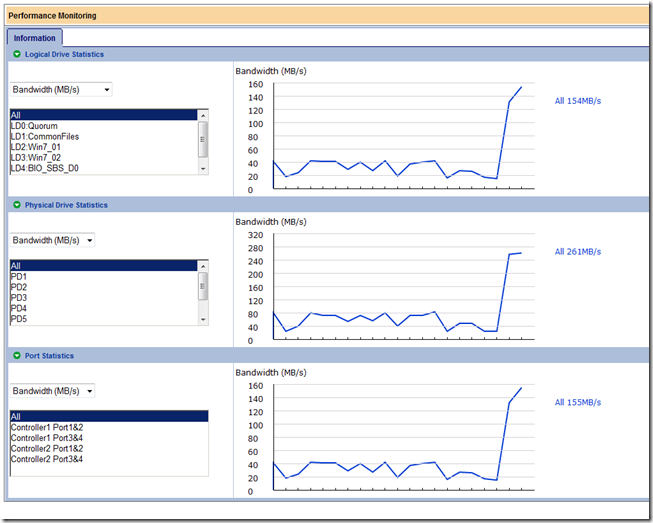

Out of the box we are dealing with 3 Gbit/s SAS connections to the VTrak, so we are not able to use the more advanced features the SAS switch offers.

The LSI SAS6160 Switch compatibility list can be found here:

- LSI SAS6160 Switch Hardware Compatibility List (PDF)

- If the link is broken then the LSI SAS6160 product page is here.

Specifically, we are looking for a replacement for the Promise VTrak that will give us access to 6 Gbit/s SAS and the advanced features offered by the SAS switch.

Now, note that the Promise VTrak E610sD requires firmware 3.36.00, and our current unit is at 3.34.00. So we need to update the firmware on the Promise before we can draw any conclusions about setting things up.
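For anyone tracking several units, the firmware check above can be sketched in a few lines of Python. This is only an illustration: it assumes the version strings are purely numeric dotted fields like the two quoted here, and the helper names `parse_version` and `needs_update` are made up for this example rather than part of any Promise tool.

```python
def parse_version(text):
    """Turn a dotted version string such as '3.36.00' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def needs_update(current, required):
    """Return True when the current firmware is older than the required one."""
    return parse_version(current) < parse_version(required)


# Versions quoted in this post.
REQUIRED_FIRMWARE = "3.36.00"
CURRENT_FIRMWARE = "3.34.00"

if needs_update(CURRENT_FIRMWARE, REQUIRED_FIRMWARE):
    print("Firmware update needed before testing")
else:
    print("Firmware meets the requirement")
```

Comparing tuples of integers instead of raw strings avoids surprises such as "3.4.00" sorting after "3.36.00".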

As for a replacement for the Promise, the first entry in the above list is actually a NetApp appliance, so we will be looking into their products. We have also been in conversations with IBM about their DS3524 dual-controller SAS unit, so we shall see where that goes.

For now, we are on the road to bringing a very flexible, high-performance, and highly redundant hardware solution online to deliver Hyper-V Failover Clusters as well as a Private Cloud solution.

Philip Elder

MPECS Inc.

Microsoft Small Business Specialists

Co-Author: SBS 2008 Blueprint Book

*Our original iMac was stolen (previous blog post). We now have a new MacBook Pro courtesy of Vlad Mazek, owner of OWN.